kopecz@its-cs1:/home/fb17/kopecz/hello_parallel> sbatch mpihello.slurm; squeue
  JOBID PARTITION     NAME     USER ST       TIME  NODES NODELIST(REASON)
    425  minijobs Hello_pa   kopecz  R       0:00      2 its-cs[100-101]
kopecz@its-cs1:/home/fb17/kopecz/hello_parallel>
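Instead of listing the whole queue, you can capture the ID of the job you just submitted and pass it to squeue -j. Below is a minimal sketch. It assumes a POSIX shell and an sbatch that supports --parsable, which prints only the job ID, optionally followed by ";cluster". The function name submit_and_show is hypothetical and not part of SLURM.

```shell
# Hypothetical helper (not part of SLURM): submit a batch script and show
# only the resulting job instead of the whole queue.
submit_and_show() {
    # --parsable makes sbatch print just "<jobid>" or "<jobid>;<cluster>".
    jobid=$(sbatch --parsable "$1") || return 1
    # Strip an optional ";<cluster>" suffix, keeping only the job ID.
    jobid=${jobid%%;*}
    # -j restricts the squeue listing to this one job.
    squeue -j "$jobid"
}
```

Usage, for the script above: submit_and_show mpihello.slurm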

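squeue accepts many more command-line options than shown here, so have a look at its man page (man squeue). The example below uses the -o option to choose which columns are printed. In a specifier such as %.7i, the .7 sets a right-justified field width of 7 characters. Besides the default fields, the example shows the minimum number of CPUs requested per node (%c, MIN_CPUS), the total number of CPUs (%C, CPUS), and whether the job's nodes may be shared with other jobs (%h, SHARED). For pending jobs (ST = PD), the last column (%R) gives the reason the job is waiting, e.g. Resources (not enough free nodes yet) or Priority (jobs with higher priority are ahead of it). For running jobs (ST = R), it lists the allocated nodes.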

kopecz@its-cs1:/home/fb17/kopecz/poisson/study4> squeue -o "%.7i %.9P %.8j %.8u %.2t %.9M %.6D %.8c %.4C %.7h %R"
  JOBID PARTITION     NAME     USER ST      TIME  NODES MIN_CPUS CPUS  SHARED NODELIST(REASON)
   8509    public  poisson   kopecz PD      0:00      8        8   64      no (Resources)
   8587    public  poisson   kopecz PD      0:00      8        8   64      no (Priority)
   8570    public  poisson   kopecz PD      0:00      8        4   32      no (Priority)
   8507    public  poisson   kopecz PD      0:00      8        2   16      no (Priority)
   8506    public  poisson   kopecz PD      0:00      8        1    8      no (Priority)
   8434    public      mpi     zier  R  15:26:47      1        1   12      no its-cs224
   8435    public     mpi2     zier  R  15:26:47      1        1   12      no its-cs225
   8433    public   static     zier  R  18:50:17      1        1   12      no its-cs229
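Typing the long format string every time is tedious. The minimal sketch below assumes a POSIX shell and wraps it in shell functions, for example in your shell startup file. The names myjobs, mypending and SQUEUE_FMT are hypothetical. Both functions restrict the listing to your own jobs with -u. mypending also uses -t PENDING together with --start, which asks SLURM for the estimated start time of each pending job, where the scheduler has computed one. Any extra arguments, such as -p public, are passed through to squeue.

```shell
# Hypothetical helpers (not part of SLURM) wrapping squeue.

# Same column layout as the squeue -o example above.
SQUEUE_FMT="%.7i %.9P %.8j %.8u %.2t %.9M %.6D %.8c %.4C %.7h %R"

# Only the current user's jobs (-u), in the custom format (-o).
myjobs() {
    squeue -u "${USER}" -o "${SQUEUE_FMT}" "$@"
}

# Only the current user's pending jobs (-t PENDING), with the
# scheduler's estimated start times (--start).
mypending() {
    squeue -u "${USER}" -t PENDING --start "$@"
}
```

With these definitions, mypending -p public lists only your waiting jobs in the public partition.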

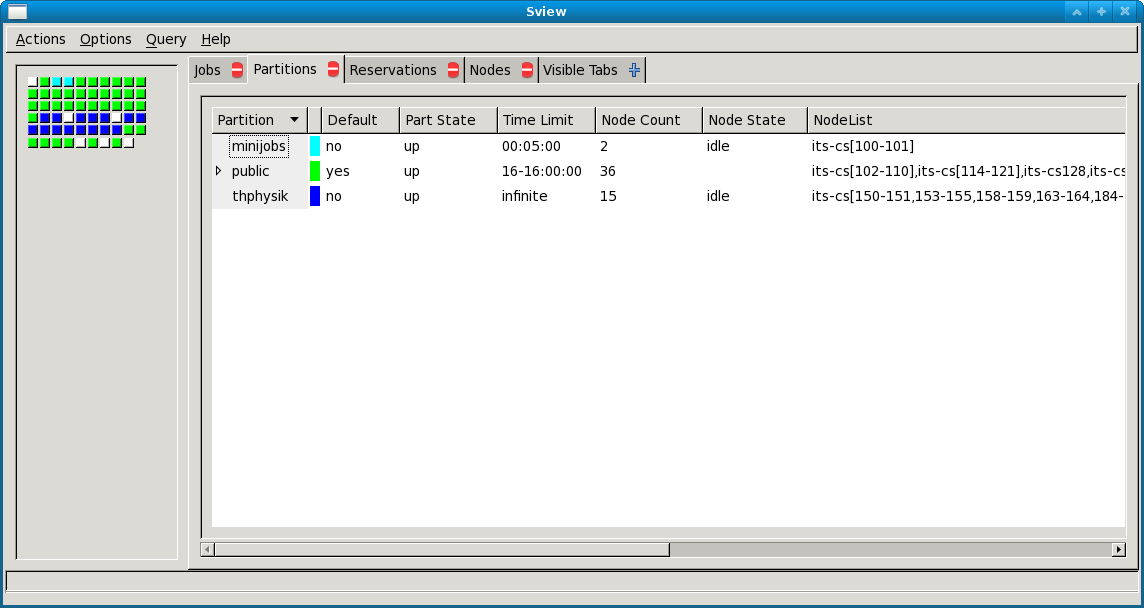

kopecz@its-cs1:/home/fb17/kopecz/hello_parallel> sinfo
PARTITION AVAIL  TIMELIMIT  NODES  STATE NODELIST
thphysik     up   infinite      3  down* its-cs[116,182,184]
thphysik     up   infinite      8   idle its-cs[117-120,158-159,179-180]
public*      up 10-00:00:0      3  down* its-cs[10,102,208]
public*      up 10-00:00:0     10   idle its-cs[103-105,145-146,148,219-222]
minijobs     up       5:00      2   idle its-cs[100-101]
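Before submitting to a partition, it can help to check how many of its nodes are idle. The minimal sketch below assumes a POSIX shell with awk, and the name idle_nodes is hypothetical. It uses the sinfo options -h (no header), -p (partition), -t idle (only idle nodes) and -o "%D" (print only the node count). The counts are summed because sinfo may print more than one line for a single partition.

```shell
# Hypothetical helper (not part of SLURM): print the number of idle
# nodes in the partition given as the first argument.
idle_nodes() {
    # One node count per output line; sum them, printing 0 if none.
    sinfo -h -p "$1" -t idle -o "%D" | awk '{ n += $1 } END { print n + 0 }'
}
```

For the cluster state shown above, idle_nodes minijobs would report the 2 idle nodes its-cs[100-101].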